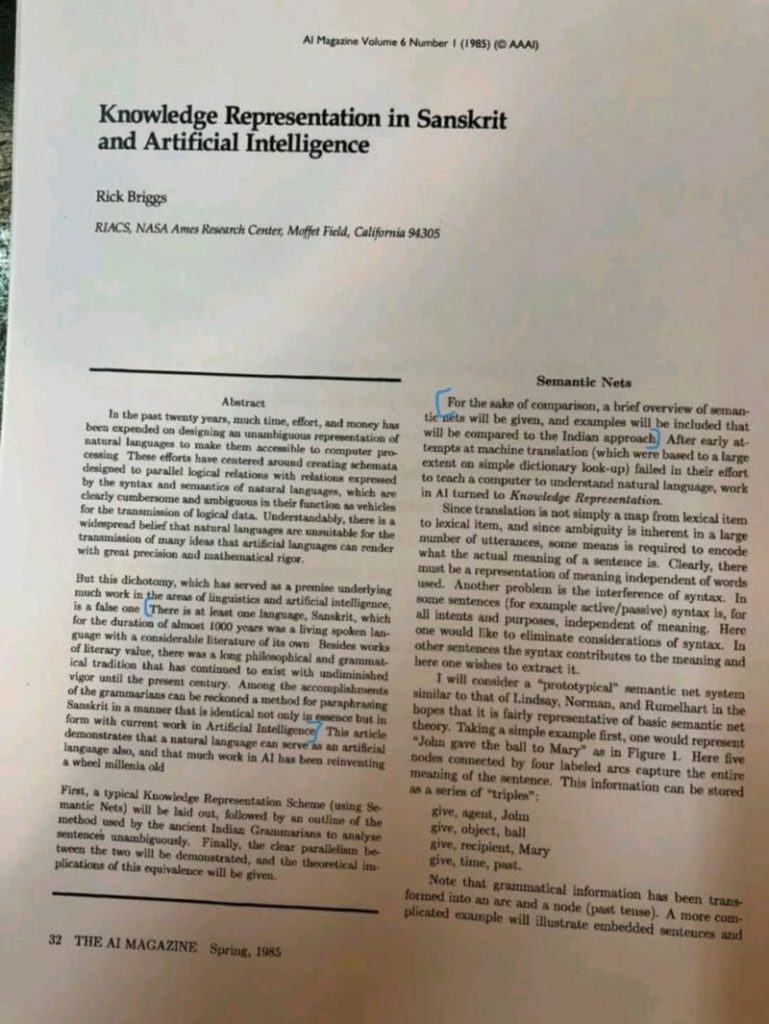

There is a social media post that keeps doing the rounds. It says that a NASA scientist claimed Sanskrit is the most suitable language for AI. People laugh at it as fake news. The 1985 article that started it all is linked at the end of this post.

But why is this fact not highlighted loudly, you may ask? There are multiple reasons.

First, Sanskrit has one of the oldest codified grammars, attributed to Panini, who wrote it around the 4th century BC. The main feature of this language is that a sentence denotes the same meaning even if you put the words in almost any sequence. This happens via inflection. In simple terms, the prefixes and suffixes attached to nouns, verbs, adjectives and derived forms mark the role each word plays, so the role does not depend on position. This preserves semantic meaning very neatly. For other aspects of grammar, too, the language has very logical, maths-like rules, which makes it feel like a programming language.
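As a toy illustration of the word-order point above, here is a minimal Python sketch. It assumes a tiny, made-up suffix table (`SUFFIX_ROLES`) and a simplified ASCII transliteration of "Rama eats fruit"; the names `role_of` and `parse` are hypothetical, and none of this is real Paninian morphology. It only shows that when the word ending carries the role, every ordering of the words yields the same reading.

```python
from itertools import permutations

# Toy suffix table: an assumption for illustration only.
# Real Sanskrit morphology is far richer than three endings.
SUFFIX_ROLES = [
    ("ah", "subject"),  # e.g. "ramah"   (nominative singular)
    ("am", "object"),   # e.g. "phalam"  (accusative singular)
    ("ti", "verb"),     # e.g. "khadati" (3rd person singular present)
]


def role_of(word):
    """Return the grammatical role implied by the word's ending."""
    for suffix, role in SUFFIX_ROLES:
        if word.endswith(suffix):
            return role
    raise ValueError(f"no toy rule for {word!r}")


def parse(sentence):
    """Map each role to its word, ignoring the position of the word."""
    return {role_of(word): word for word in sentence.split()}


words = ["ramah", "phalam", "khadati"]  # roughly "Rama eats fruit"
parses = [parse(" ".join(order)) for order in permutations(words)]

# All six orderings produce the same role assignment.
assert all(p == parses[0] for p in parses)
print(parses[0])
```

In an English-like language the same trick fails, because "Rama eats fruit" and "fruit eats Rama" differ only by position.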

Now, this aspect of Sanskrit gets a mention in the introductory sections of big books on compilers and parsers. It is also mentioned by the legendary MIT professor Noam Chomsky, whose language theory formed the basis of Natural Language Processing. But the world has moved on from Prolog and Lisp to more sophisticated models of NLP. So the early theory of the field won't get a mention in new papers; there is no motive here. The same has happened to work on compilers.

Cut to today: our early NLP libraries were built on the work of many researchers after Chomsky and relied on the typical tokenize-parse-interpret sequence.

The current champion of NLP, i.e. BERT, was celebrated for looking at relationships bidirectionally, using the context on both sides of a word, which allowed it to better correlate words in terms of their meaning. This feat actually derives from Sanskrit, but it is not an exclusive feature of Sanskrit. So we don't have a reason to suspect prejudice. Nor can one person read all the research papers where it might be mentioned.
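To make "bidirectional" concrete, here is a small Python sketch. It is not BERT and uses no model at all; the function names `left_context` and `bidirectional_context` and the example sentence are assumptions made for illustration. It only shows which words are visible when looking at one position from the left alone versus from both sides.

```python
def left_context(tokens, i):
    """Tokens a strictly left-to-right reader has seen before position i."""
    return tokens[:i]


def bidirectional_context(tokens, i):
    """Tokens on both sides of position i, excluding the word itself."""
    return tokens[:i] + tokens[i + 1:]


tokens = "he sat on the bank of the river".split()
i = tokens.index("bank")

print(left_context(tokens, i))
print(bidirectional_context(tokens, i))
```

Only the second view contains "river", which is the word that tells you this "bank" is not a financial one.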

Now the next phase in the evolution of NLP is to move beyond sentences and arrive at comprehension at a higher, aggregate level, like how you and I can understand poetry in all of its abstract, metaphorical, erratic flow. This calls not only for processing language in terms of words, relations and meaning, but also for iterative dives into parallel knowledge models and alternate meanings. Think of reading a satirical poem….

When the next production-strength NLP winner is published, it will derive from the kind of inflection Sanskrit has, but whether Sanskrit gets a mention in the research paper will depend on the prior work the researcher has referred to.

So, however good the ancient languages are, this is simply how research methodology and citation work; we don't always have to suspect motives.

PS: I have tried to give a very simplified view of NLP and Sanskrit here, so experts in the field should pardon the simplification.

Link to the original paper that the images shown here are from: https://ojs.aaai.org/index.php/aimagazine/article/view/466

Link to the BERT paper: https://arxiv.org/abs/1810.04805

Here is an excellent article on the timeline of parsing as a technique: https://jeffreykegler.github.io/personal/timeline_v3