What is system design in software?

Budding architects often ask me how to learn system design. Practically speaking, system design has become a rite of passage for technology architects. Yet system design has no single, clear definition. The term became popular when Big Tech, and the companies copying Big Tech, started asking candidates to design distributed solutions. So when a budding architect decides to learn it, they end up mixing the AWS certification syllabus with lots of Martin Fowler blog posts on event-driven systems. Add some Kubernetes, top it up with Kafka. Some CAP theorem and consensus algorithms would be nice too. And finally a cheat sheet or two, like the numbers every engineer should know. There is nothing wrong with learning all this. But we must also acknowledge that these are disjoint pieces of knowledge that place a heavy cognitive burden on a newcomer and can even leave a few people discouraged.

A simpler learning approach

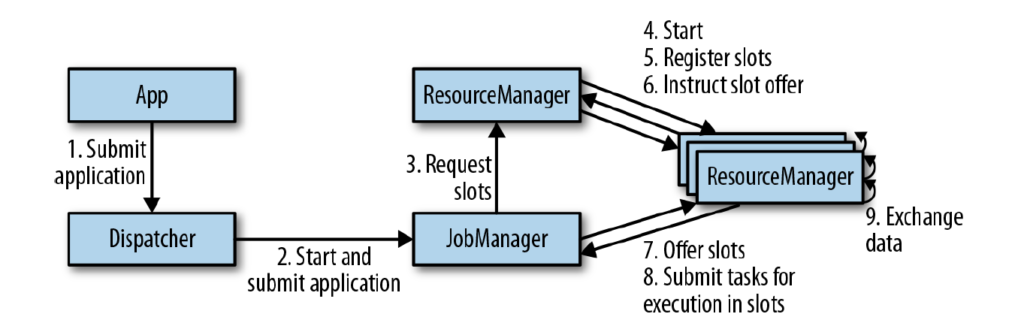

In my alternative approach, I ask my mentees to first design a reasonably complex piece of software and then scale it to the cloud. That is it. No Kubernetes or Kafka mandated. Take, for example, this simple diagram from the Apache Flink architecture. It is a very typical arrangement for Hadoop-style frameworks: MapReduce, Spark, Storm and so on.

Can your engineers design a robust implementation of this for a single server? That is a fine system to design, and we can always scale it to the cloud later. This is where you will realize that many of the items on your system design syllabus are needed only because of the move to the cloud; they are not an essential part of system design itself. So, ideally, one can design Pinterest for a single server and learn the craft, or design Google Drive for one server (why not design HDFS? Ever wondered why that is not a popular question?) and master the complexities. Scaling to the internet can come later, at a slow, measured pace.
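To make the single-server exercise concrete, here is a minimal sketch of the map, shuffle and reduce stages that MapReduce-style frameworks share, assuming a word-count job and Python threads standing in for cluster workers. The function names (`map_phase`, `reduce_phase`, `run_job`) are hypothetical and this is not Flink's or Hadoop's API. It only shows the shape; a robust single-server version would still need the hard parts, such as bounded queues for backpressure, spilling to disk when partitions outgrow memory, and retrying failed tasks.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(split):
    # Hypothetical mapper: emit a (word, 1) pair for every word in one input split.
    return [(word.lower(), 1) for word in split.split()]


def reduce_phase(partition):
    # Hypothetical reducer: sum the values collected for each key in one partition.
    return {key: sum(values) for key, values in partition.items()}


def run_job(splits, num_mappers=4, num_reducers=2):
    # Map stage: each split is processed by a worker thread.
    with ThreadPoolExecutor(max_workers=num_mappers) as pool:
        mapped = list(pool.map(map_phase, splits))

    # Shuffle stage: hash-partition every key so all values for one key
    # land in the same reducer partition.
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for pairs in mapped:
        for key, value in pairs:
            partitions[hash(key) % num_reducers][key].append(value)

    # Reduce stage: each partition is reduced independently.
    with ThreadPoolExecutor(max_workers=num_reducers) as pool:
        reduced = list(pool.map(reduce_phase, partitions))

    # Sink: partitions hold disjoint keys, so merging them is safe.
    result = {}
    for part in reduced:
        result.update(part)
    return result


if __name__ == "__main__":
    print(run_job(["the quick brown fox", "the lazy dog", "The end"]))
```

With those three splits, `the` ends up with a count of 3 and every other word with 1. Keeping the stages as separate functions connected by plain data is what later lets you swap threads for processes, then for machines, when you do decide to scale out.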

This also brings me to a side point: the Gang of Four is still the baseline where you start your journey toward developing your design skills. A good share of your system design ideas still come from there. Only when you decide to make your software internet-scale do you run into issues of guarantees, consistency and latency, the three main pillars that will then shape your journey.

Books recommended

So here are the books I recommend, in no particular order.

1. Design Patterns, by the Gang of Four

2. Cloud Native Architectures, Packt Publishing

3. The Architecture of Open Source Applications (they have a website, or you can get the book)

4. Architecting for Scale, O'Reilly. This is a good book that teaches you the cloud without the sales and marketing fluff that comes with vendor cloud certifications.

5. Fundamentals of Software Architecture. This is a wisdom-style book that tells you about the roles, the decision-making process and popular architecture choices.

6. Designing Data-Intensive Applications. A little dated, but it has good coverage of databases and big data systems. A one-stop shop.

7. Alex Xu's System Design Interview. Though it is titled as an interview book, it is a good book with excellent breadth. If you go with the theme of this post, this could be your final book.

One last thing. There are many other topics and books one needs to read: Fowler's books on refactoring and enterprise integration, Eric Evans's book on DDD, Pini Reznik's book on cloud native transformation, Bellemare's book on event-driven microservices, Sam Newman's book on microservices, Accelerate on CI/CD, even SICP from MIT Press.

But these are all good books to pick up once you know the landscape well; otherwise they will confuse you as a practitioner.

In the end, system design is all about interviews. When it comes to your own project, your problems won't necessarily fit the textbook (which is when you should reach for a specialist book or your mentors).

Here is also a detailed skill matrix to help you plan your growth: https://docs.google.com/spreadsheets/d/1lAFfBj7UM3NZrS3ywsHZsK4pnWN5eWZJCeSt-UtQyu0/edit?usp=sharing

Good luck